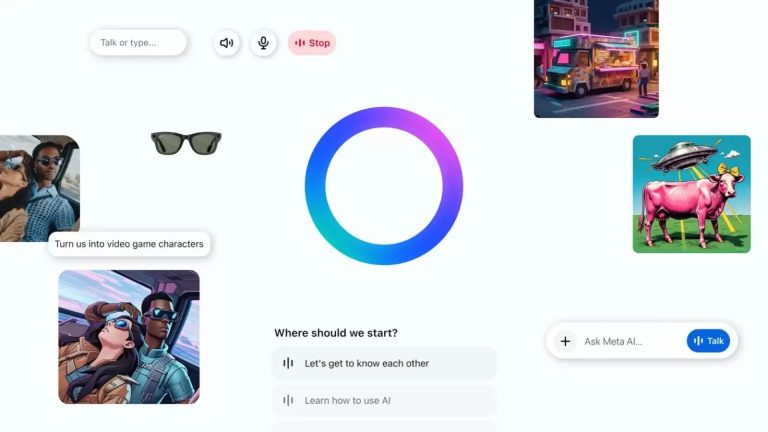

Meta has launched a new standalone AI app powered by its latest language model, Llama 4, offering users a more personal, voice-driven digital assistant. The app, now available in select countries, marks Meta's first major step towards an AI that is not only conversational but also more deeply integrated with its family of products, including WhatsApp, Instagram, Facebook and Messenger.

Voice conversations take centre stage

The highlight of the new Meta AI app is its voice-first interface. To make interactions more seamless and natural, users can now speak directly to the AI rather than type. Meta has also introduced full-duplex speech technology in a demo mode, which lets the assistant talk back in real time without waiting for the user to finish speaking. The demo can be toggled on or off and is currently available in the US, Canada, Australia and New Zealand.

Unlike typical voice assistants, which read pre-written responses aloud, Meta AI generates its voice responses in real time, mimicking natural dialogue. Because this is an early version, however, users may encounter glitches or delays, and Meta is encouraging feedback to help refine the experience.

Smarter and more personal

According to Meta, the app is built on its Llama 4 model and promises more relevant, helpful and context-aware responses. Meta AI learns from user interactions and can remember preferences when given permission. It also draws on data already shared on Meta platforms, such as Facebook likes or Instagram follows, to deliver answers that reflect individual interests.

For example, if a user enjoys travel and language learning, Meta AI can tailor its recommendations and ideas to those topics. Users in the US and Canada will be the first to receive these personalised answers.

Integration with the Meta ecosystem

One of the key strengths of the Meta AI app is its integration across Meta's suite of products. Whether a user is browsing Instagram, chatting on Messenger or catching up with friends on Facebook, the assistant is always within reach. It is also accessible through Ray-Ban Meta smart glasses, which now work in sync with the app.

Meta is merging the new AI app with the existing Meta View app used to manage Ray-Ban Meta smart glasses. Once users update to the new app, their settings, media and connected devices will transfer automatically to a dedicated Devices tab. This lets users start a conversation on their smart glasses and continue it later in the app or on the web.

Discover feed and community sharing

The Meta AI app introduces a Discover feed where users can browse popular prompts, share their own AI-generated content and remix ideas shared by others. Nothing is posted publicly unless a user chooses to share it. The feed aims to foster a sense of community around AI use and spark creativity among users.

Enhanced web experience

Meta says it has also upgraded the web version of its AI assistant. The web version now supports voice interactions and features a redesigned image generation tool with options to customise style, lighting and mood. In selected regions, Meta is testing a new document editor that lets users create image-rich documents, export them as PDFs and import existing files for AI analysis.

User control remains central

Meta says the new app puts users in control. A visible icon always indicates when the microphone is in use, and a "Ready to talk" setting can be turned on for hands-free conversation. Privacy remains a key consideration, with controls that let users manage what data is used and what Meta AI can remember.